I just sent a pile of updates to Princeton. They will probably be added to the site on the 2nd.

The index page has been re-written to include pointers to some of the major sources used for the material on the site.

An Intermittent Problems page has been added to describe how to deal with intermittent problems.

The mdb, dtrace and Disk I/O pages have been rewritten and expanded. The adb and mdb pages were merged to reflect the fact that Solaris 7 is really a dead OS at this point in time. The Disk I/O page was updated to reflect current Solaris 10 information from Solaris Internals and Solaris Performance and Tools by McDougall, Mauro and Gregg, and especially to include pointers to the really cool tools on the DTrace Toolkit page.

The kstat page has been updated to provide some additional information, and the netstat page has been changed to reflect the death of netstat -k.

The next major effort for the site will be an expansion of the zones page. (The current page is really not much more than a placeholder to avoid dead links on the other pages that refer to zones.)

I am also working on a root cause analysis page. This page is turning out to involve a lot of reading of business publications; the business community seems to be way ahead of us in thinking about this issue.

--Scott

Updates for mdb, dtrace and diskio pages

New Year 2007 - The year of GNU/Linux

The inherent strength of GNU/Linux lies in the fact that all the configuration pertaining to the OS is saved in liberally commented text files which reside in a specific location. And almost all actions executed by the OS are logged in the appropriate files, which are also plain text files. For example, reading the file /var/log/messages will reveal a wealth of knowledge about the actions carried out by the OS and the errors, if any, during boot-up. So once the initial learning curve is overcome, it becomes a joy to work in GNU/Linux.

I am not trying to disparage Microsoft, but when you have a fabulous choice in GNU/Linux which comes with an unbeatable price tag (free), and you are able to do almost all your tasks in GNU/Linux barring, say, playing some of your favorite games, why would you consider paying hundreds of dollars for another OS? Moreover, if you are an avid gaming enthusiast, you should rather be buying a Sony PlayStation, a Nintendo Wii or even an Xbox, and not an OS.

There was a time when I used to boot into Windows to carry out certain tasks. But for the past many months, I have realized that I am able to do all my tasks from within GNU/Linux itself and it has been some time now since I have booted into Windows.

On this positive note, I wish you all a very happy and prosperous New Year.

mdb and kmdb pages

I've submitted the following new pages to Princeton for inclusion: Intermittent Problems, mdb and kmdb. I also made significant improvements to the dtrace page.

A great collection of repositories for Open SuSE Linux

Labels: repositories, SuSE

dtrace, methodology, SMF

I've submitted pages on general methodology, dtrace and SMF. Depending on Princeton's work schedule, they may not be up until after the holidays.

KVM Virtualization solution to be tightly integrated with Linux kernel 2.6.20

Updated Load Average and ZFS Tuning

I've posted updates to the discussion of Load Averages and ZFS Tuning.

25 Shortcomings of Microsoft Vista OS - A good reason to choose GNU/Linux ...

- Vista introduces a new variant of the SMB protocol - (I wonder what is the future of Samba now...)

- Need significant hardware upgrades

- No anti-virus bundled with Vista

- Many third party applications still not supported

- Your machine better have a truck load of Memory - somewhere around 2 GB. (Linux works flawlessly with just 128 MB... even less).

- Too many Vista editions.

- Need product activation. (Now that is something you will never see in Linux).

- Vista OS will take over 10 GB of hard disk space. (With Linux you have a lot of flexibility with respect to the size of the distribution.).

- Backing up the desktop will take up a lot of space. (Not so in Linux)

- No must have reasons to buy Vista. (The fact that Linux is Free is reason enough to opt for it)

- Is significantly different from Windows XP and so there is a learning curve. (Switching to Linux also involves some learning curve but then it is worth it as it doesn't cost you much and in the long run, you have a lot to gain).

- You'd better come to terms with the cost of Vista - it is really exorbitant running to over $300. (In price, Vista can't beat Linux which is free as in beer and Freedom).

- Hardware vendors are taking their own time to provide support for Vista. (Nowadays, more and more hardware vendors are providing support for Linux).

- Vista's backup application is more limited than Windows XP's. (Linux has a rich set of backup options and every one of them is free).

- No VoIP or other communication applications built in. (Skype, Ekiga... the list goes on in Linux).

- Lacks intelligence and forces users to approve the use of many native applications, such as a task scheduler or disk defragmenter. (Linux is flexible to a fault).

- Buried controls - requiring a half a dozen mouse clicks. (Some window managers in Linux also have this problem but then here too, you have a variety of choice to suit your tastes).

- Installation can take hours, upgrades even more. (Barring upgrades, installation of Linux will take at most 45 minutes. Upgrades will take a little longer).

- Little information support for Hybrid hard drives.

- 50 Million lines of code - equates to countless undiscovered bugs. (True, true... It is high time you switch to Linux).

- New volume-licensing technology limits installations or requires dedicated key-management servers to keep systems activated. (Linux users do not have this headache I believe).

- Promises have remained just that - mere promises. A case in point being WinFS, Virtual folders and so on. (Clever marketing my friend, to keep you interested in their product).

- Does not have support for IPX, Gopher, WebDAV, NetDDE and AppleTalk. (Linux has better support for many protocols which Windows does not support).

- Wordpad's ability to open .doc files has been removed. (Now that is what I call extinguishing with style. OpenOffice.org, which is bundled with most Linux distributions, can open, read and write DOC files).

Labels: opinionated articles

SysAdmin article

An expanded and rewritten version of the Resource Management page has been tentatively accepted by SysAdmin for its April 2007 issue.

Updated pages

I've submitted some updated pages for Resource Management, ZFS and Scheduling.

I also added a beginning of a page on Zones.

--Scott

Various ways of detecting rootkits in GNU/Linux

Detecting rootkits on your machine running GNU/Linux

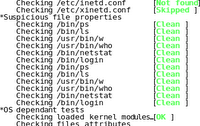

I know of two programs which aid in detecting whether a rootkit has been installed on your machine. They are Rootkit Hunter and Chkrootkit.

Rootkit Hunter

Once installed, you can run rootkit hunter to check for any rootkits infecting your computer using the following command:

# rkhunter -c

- MD5 tests to check for any changes

- Checks the binaries and system tools for any rootkits

- Checks for trojan specific characteristics

- Checks for any suspicious file properties of most commonly used programs

- Carries out a couple of OS dependent tests - this is because rootkit hunter supports multiple OSes.

- Scans for any promiscuous interfaces and checks frequently used backdoor ports.

- Checks all the configuration files such as those in the /etc/rc.d directory, the history files, any suspicious hidden files and so on. For example, in my system, it gave a warning to check the files /dev/.udev and /etc/.pwd.lock .

- Does a version scan of applications which listen on any ports such as the apache web server, procmail and so on.
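The MD5 test in the list above comes down to comparing a file's current digest with one recorded while the system was known to be clean. Here is a minimal Python sketch of that idea. It is not how rkhunter itself is implemented, and the function names and the baseline dictionary are hypothetical, chosen only for illustration.

```python
import hashlib


def md5_of_file(path, chunk_size=65536):
    """Return the hex MD5 digest of the file at path, read in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def changed_files(baseline):
    """Return the paths whose current digest differs from the recorded one.

    baseline maps a file path to the digest recorded when the system was
    known to be clean (a hypothetical structure used for illustration).
    """
    return [path for path, known in baseline.items()
            if md5_of_file(path) != known]
```

A check like this is only as trustworthy as the stored baseline, so the recorded digests would need to be kept somewhere an intruder cannot modify them.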

On my machine, the scanning took 175 seconds. By default, rkhunter conducts a known good check of the system. But you can also insist on a known bad check by passing the '--scan-knownbad-files' option as follows :

# rkhunter -c --scan-knownbad-files

# rkhunter --update

59 23 1 * * echo "Rkhunter update check in progress";/usr/local/bin/rkhunter --update

Chkrootkit

# chkrootkit -l

# chkrootkit -x

Labels: slashdotted

FSF starts campaign to enlighten computer users against Microsoft's Vista OS

John Sullivan, the FSF program administrator, has aptly put it thus:

Vista is an upsell masquerading as an upgrade. It is an overall regression when you look at the most important aspect of owning and using a computer: your control over what it does. Obviously MS Windows is already proprietary and very restrictive, and well worth rejecting. But the new 'features' in Vista are a Trojan Horse to smuggle in even more restrictions. We'll be focusing attention on detailing how they work, how to resist them, and why people should care.

RPM to be revitalized - courtesy of Fedora Project

Perhaps the need of the hour is that all Linux distributions support a universal package format, with all packages residing in a central repository which can be shared by all Linux distributions alike. But this scenario looks bleak, with Debian having its own dpkg format and Red Hat based distributions having their own RPM based package formats. At least there is going to be better interoperability between different Red Hat based Linux distributions in the future, as one of the goals of this new project is to work towards a shared code base between SuSE, Mandrake, Fedora and so on. At present, a lot of work in creating packages and maintaining repositories is being repeated over and over. But Fedora's decision breathes new life into the future of RPM, and one can hope to see RPM morph into a more efficient, robust package manager with fewer bugs.

- Give RPM a full technical review and work towards a shared base.

- Make RPM a lot simpler.

- Remove a lot of existing bugs in the RPM code base.

- Make it more stable.

- Enhance the RPM-Python bindings thus bringing greater interoperability between Python programs and RPM.

Sun Microsystems - doing all it can to propagate its immense software wealth

Taking all these events into consideration, Sun is doing everything in its power to ensure that the fruits of its hard work live on and gain in popularity. A few days back when I visited Sun's website, I was surprised to see a link offering to send a free DVD media kit consisting of the latest build of Solaris 10 and Sun Studio 11 software to the address of one's choice. I have always believed that one of the reasons for Ubuntu gaining so much popularity was its decision to ship free CDs of its OS. Perhaps taking a leaf from Ubuntu, Sun has also started shipping free DVDs of Solaris 10 to anybody who wants a copy - a sure way of expanding its community.

Labels: opinionated articles, solaris

Travails of adding a second hard disk in a PC running Linux

ZFS and Resource Pools

Additional pages for ZFS and Resource Pools have been submitted. The scheduler page has been expanded.

Sources for the ZFS page include the following:

Solaris ZFS Administration Guide

Brune, Corey, ZFS Administration, SysAdmin Magazine Jan 2007

Humor - Get your ABC's of Linux right

A is for awk, which runs like a snail, and

B is for biff, which reads all your mail.

C is for cc, as hackers recall, while

D is for dd, the command that does all.

E is for emacs, which rebinds your keys, and

F is for fsck, which rebuilds your trees.

G is for grep, a clever detective, while

H is for halt, which may seem defective.

I is for indent, which rarely amuses, and

J is for join, which nobody uses.

K is for kill, which makes you the boss, while

L is for lex, which is missing from DOS.

M is for more, from which less was begot, and

N is for nice, which it really is not.

O is for od, which prints out things nice, while

P is for passwd, which reads in strings twice.

Q is for quota, a Berkeley-type fable, and

R is for ranlib, for sorting ar table.

S is for spell, which attempts to belittle, while

T is for true, which does very little.

U is for uniq, which is used after sort, and

V is for vi, which is hard to abort.

W is for whoami, which tells you your name, while

X is, well, X, of dubious fame.

Y is for yes, which makes an impression, and

Z is for zcat, which handles compression.

Labels: humor

Ishikawa and Interrelationship Diagrams

I've been working on a page including information on some formal troubleshooting methods. En route, I have been looking at Cause-and-Effect (Ishikawa fishbone) diagrams and Interrelationship Diagrams.

Here are some of the noteworthy web pages I've been looking at:

Concordia: Cause and Effect Diagram and

Concordia Interrelationship Diagram provide a nice introduction to the two types of diagrams.

HCI Cause and Effect Diagram provides a slightly longer article, including some historical information about Ishikawa diagrams.

balancedscorecard.org Cause and Effect Diagram provides a much more in-depth view of Ishikawa diagrams.

questlearningskills.org Interrelationship Diagrams provides a howto level article about Interrelationship diagrams.

ASQ Interrelationship Diagrams provides a slightly longer article about Interrelationship Diagrams.

Root Cause Analysis: A Framework for Tool Selection provides a nice comparison of Ishikawa and Interrelationship diagrams, as well as Current Reality diagrams.

Trolltech's Qtopia Greenphone

Specifications of the Qtopia Greenphone

The hardware consists of the following:

- Touch-screen and keypad UI

- QVGA® LCD color screen

- Marvell® PXA270 312 MHz application processor

- 64MB RAM & 128MB Flash

- Mini-SD card slot

- Broadcom® BCM2121 GSM/GPRS baseband processor

- Bluetooth® equipped

- Mini-USB port

- 512 MB RAM

- 2.2 GB HDD space and

- 1 GHz processor

Resource Management resources

I've been looking at documentation on Resource Management over the last few days. Here are some of the articles that I have found. Unfortunately, much of the information I found is based on the Solaris 9 and even Solaris 8 implementations of Resource Manager, which is only somewhat useful when looking at Solaris 10.

If you are aware of additional resources, please feel free to add them to the comments on this post.

Here are the best items I've found:

System Administration Guide: Solaris Containers-Resource Management and Solaris Zones from the Solaris 10 documentation. This is quite well-written, though organized differently than I would have done it.

The Sun BluePrints Guide to Solaris Containers by Foxwell, Lageman, Hoogeveen, Rozenfeld, Setty and Victor. The Resource Management section is also quite well-written, and I found the organization to be more helpful than the manual in the Solaris 10 docs.

Solaris Resource Management by Galvin in SysAdmin. This is a high-level introduction. Though it is specific to Solaris 9, it is still the best quick introduction to the subject I've come across.

Capping a Solaris processes memory by matty is a blog page describing the ability of Solaris 10 to use rcapd to manage memory. This is a brief but thorough discussion of this topic.

FizzBall - A well designed enjoyable game for Linux

- Which baby animal can be called a kid? Goat

- A group of these animals can be called a Mob. - I forgot the answer ;-)

- A group of these animals can be called a pride. Lions

- Which baby animal can be called a gosling ? Goose

- Which animal's baby can be called a snakelet ? snake

- A group of these animals can be called a Parliament. Owl

FizzBall game features

- Over 180 unique levels of game play.

- The game stage is automatically saved once you exit the game and you can continue where you left off the next time you start playing.

- Multiple users can be created and each user's game is saved separately.

- There are two modes - Regular mode and Kids mode. The kids mode does not allow you to lose the balls and includes fun quizzes between levels.

- If you lose all your bubbles, you can still continue with the game, though all your scores will be canceled.

- Get trophies for achieving unique feats. For example, I received a trophy for capturing an alien without getting hit by a laser :-) .

Pros of the game

- Eye catching design and excellent graphics.

- Is educative for little kids as well as entertaining for all ages.

- Over 180 levels in both the regular and kids mode of the game.

Is not released under GPL, with the full version of the game costing USD $19.95. A time limited demo version of the game is available though for trying out before buying. But having played the full game, I would say that the money is well spent.

The good news is, professional game developers are seriously eyeing the Linux OS alongside Windows as a viable platform to release their games, FizzBall being a case in point.

Labels: games

Introduction

This blog is designed to be a companion to my Solaris Troubleshooting web site, hosted by Princeton University.

I used to have an email link to solicit feedback on the web site. I received some outstanding feedback, but I also received an outstanding amount of spam.

I am in the process of updating the site to include more Solaris 10 specific information, especially with regards to Resource Management and dtrace. I've posted a first cut at a Resource Management page.

Thanks to everyone who contributed to the old Solaris 8 site, and a special thanks to Princeton University for continuing to host the site long after I no longer worked on their Unix team.

--Scott Cromar

Richard M Stallman talks on GPL version 3 at the 5th International GPLv3 Conference in Japan

Labels: Richard Stallman

Making the right decisions while buying a PC

The gist of his choice filters down to the following:

- ATX tower case - is capable of holding a full size motherboard with space for several optical drives and is ideal for home users and gaming enthusiasts.

- CPU - As of now Intel core duo provides the best power-performance-price ratio. Enough applications have been optimized for dual-core chips that these should be considered for any moderate to heavy use, especially when multitasking.

- Always go for motherboards that have the PCI Express slots over the now fast becoming outdated ordinary PCI slots.

- And with respect to memory (RAM), your best bet is to go for at least DDR-400, though ideally DDR2-800 is recommended. And don't even think of a machine with less than 512 MB RAM. The article strongly recommends 2 GB of memory if you can afford it, as near future applications and OSes will demand that much memory.

- On the storage front, if you are in the habit of archiving video or hoarding music on your hard disk, do consider a hard disk of at least 150 GB. The article recommends Western Digital's Raptor 150 GB drives if you are on the lookout for better performance, and the Seagate Barracuda 750 GB for those on the lookout for larger capacity. Both are costly though.

- And do go for a DVD writer over a CD-RW/DVD combo.

Labels: hardware

A peep into how Compact Discs are manufactured

Update (Feb 14th 2007): The Youtube video clip embedded here has been removed as I have been notified by its real owners that the video clip is copyrighted.

Ifconfig - dissected and demystified

# ifconfig eth0 192.168.0.1 netmask 255.255.255.0 broadcast 192.168.0.255 up

eth0 Link encap:Ethernet HWaddr 00:70:40:42:8A:60

inet addr:192.168.0.1 Bcast:192.168.0.255 Mask:255.255.255.0

UP BROADCAST NOTRAILERS RUNNING MULTICAST MTU:1500 Metric:1

RX packets:160889 errors:0 dropped:0 overruns:0 frame:0

TX packets:22345 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:33172704 (31.6 MiB) TX bytes:2709641 (2.5 MiB)

Interrupt:9 Base address:0xfc00

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:43 errors:0 dropped:0 overruns:0 frame:0

TX packets:43 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:3176 (3.1 KiB) TX bytes:3176 (3.1 KiB)

- Link encap:Ethernet - This denotes that the interface is an ethernet related device.

- HWaddr 00:70:40:42:8A:60 - This is the hardware address or MAC address, which is unique to each ethernet card manufactured. Usually, the first half of this address contains the manufacturer code, which is common to all the ethernet cards made by the same manufacturer, and the rest denotes the device ID, which should not be the same for any two devices made by that manufacturer.

- inet addr - indicates the machine IP address

- Bcast - denotes the broadcast address

- Mask - is the network mask which we passed using the netmask option (see above).

- UP - This flag indicates that the interface has been activated; the kernel modules related to the ethernet interface have been loaded.

- BROADCAST - Denotes that the ethernet device supports broadcasting - a necessary characteristic to obtain IP address via DHCP.

- NOTRAILERS - indicates that trailer encapsulation is disabled. Linux usually ignores trailer encapsulation, so this value has no effect at all.

- RUNNING - The interface is ready to accept data.

- MULTICAST - This indicates that the ethernet interface supports multicasting. Multicasting can be best understood by relating to a radio station. Multiple devices can capture the same signal from the radio station but if and only if they tune to a particular frequency. Multicast allows a source to send a packet(s) to multiple machines as long as the machines are watching out for that packet.

- MTU - short for Maximum Transmission Unit, this is the maximum size of a packet that the ethernet card will transmit. The default MTU for ethernet devices is 1500, though you can change the value by passing the necessary option to the ifconfig command. Setting this to a higher value could risk packet fragmentation or buffer overflows. Do compare the MTU value of your ethernet device with that of the loopback device and see whether they are the same. Usually, the loopback device will have a larger packet length.

- Metric - This option can take a value of 0, 1, 2, 3 and so on, with lower values having more leverage. The value of this property decides the priority of the device, and it has significance only while routing packets. For example, if you have two ethernet cards and you want to force your machine to use one card over the other when sending data, you can set the Metric value of the card you favor lower than that of the other card. I am told that in Linux, setting this value using ifconfig has no effect on which card is chosen, as Linux uses the Metric value in its routing table to decide the priority.

- RX Packets, TX Packets - The next two lines show the total number of packets received and transmitted respectively. As you can see in the output, the total errors are 0, no packets are dropped and there are no overruns. If you find the errors or dropped value greater than zero, then it could mean that the ethernet device is failing or there is some congestion in your network.

- collisions - The value of this field should ideally be 0. If it has a value greater than 0, it could mean that the packets are colliding while traversing your network - a sure sign of network congestion.

- txqueuelen - This denotes the length of the transmit queue of the device. You usually set it to smaller values for slower devices with a high latency such as modem links and ISDN.

- RX Bytes, TX Bytes - These indicate the total amount of data that has passed through the ethernet interface in either direction. Taking the above example, I can fairly assume that I have used up 31.6 MiB in downloading and 2.5 MiB in uploading, which is a total of about 34.1 MiB of bandwidth. As long as there is some network traffic being generated via the ethernet device, both the RX and TX bytes will go on increasing.

- Interrupt - From the data, I come to know that my network interface card is using the interrupt number 9. This is usually set by the system.
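The relationship between the inet addr, Mask and Bcast fields, and the two halves of the hardware address, can be checked with a few lines of Python. This is only a sketch using the standard ipaddress module; the helper names describe_interface and split_mac are made up for illustration, and the values are the ones from the sample output above.

```python
import ipaddress


def describe_interface(address, netmask):
    """Derive the network and broadcast addresses from an IP and netmask."""
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    return {
        "network": str(iface.network.network_address),
        "broadcast": str(iface.network.broadcast_address),
        "prefix_length": iface.network.prefixlen,
    }


def split_mac(mac):
    """Split a MAC address into its manufacturer prefix and device part."""
    octets = mac.upper().split(":")
    if len(octets) != 6:
        raise ValueError(f"expected six octets, got {mac!r}")
    return ":".join(octets[:3]), ":".join(octets[3:])


if __name__ == "__main__":
    print(describe_interface("192.168.0.1", "255.255.255.0"))
    print(split_mac("00:70:40:42:8A:60"))
```

The broadcast address computed this way should match the one passed to ifconfig with the broadcast option; if it does not, the netmask and broadcast values given on the command line are inconsistent with each other.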

Learning to use the right command is only a minuscule part of the job of a network administrator. The major part of the job is in analyzing the data returned by the command and arriving at the right conclusions.
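As a small illustration of analyzing the data rather than just collecting it, the sketch below pulls the counters out of ifconfig output and reports any that deserve a second look. It assumes the older net-tools output format shown above; other versions of ifconfig print these fields differently. The function names and the SAMPLE text are hypothetical and exist only for this example.

```python
import re

# A trimmed copy of the eth0 output shown earlier in this post.
SAMPLE = """\
eth0 Link encap:Ethernet HWaddr 00:70:40:42:8A:60
RX packets:160889 errors:0 dropped:0 overruns:0 frame:0
TX packets:22345 errors:0 dropped:0 overruns:0 carrier:0
collisions:0 txqueuelen:1000
RX bytes:33172704 (31.6 MiB) TX bytes:2709641 (2.5 MiB)
"""


def parse_counters(text):
    """Collect the numeric RX/TX counters into a flat dictionary."""
    counters = {}
    # Lines such as "RX packets:160889 errors:0 dropped:0 ..."
    for direction, body in re.findall(r"(RX|TX) packets:(.*)", text):
        for name, value in re.findall(r"(\w+):(\d+)", "packets:" + body):
            counters[f"{direction.lower()}_{name}"] = int(value)
    # The byte totals for both directions share one line.
    for direction, value in re.findall(r"(RX|TX) bytes:(\d+)", text):
        counters[f"{direction.lower()}_bytes"] = int(value)
    match = re.search(r"collisions:(\d+)", text)
    if match:
        counters["collisions"] = int(match.group(1))
    return counters


def problem_counters(counters):
    """Return the error-type counters that are greater than zero."""
    watched = ("errors", "dropped", "overruns", "collisions")
    return {name: value for name, value in counters.items()
            if value > 0 and name.endswith(watched)}


if __name__ == "__main__":
    stats = parse_counters(SAMPLE)
    print("total bytes:", stats["rx_bytes"] + stats["tx_bytes"])
    print("needs attention:", problem_counters(stats))
```

An empty result from problem_counters corresponds to the healthy case described above, where errors, dropped packets, overruns and collisions are all zero.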

Labels: howtos, system administration

LinuxBIOS - A truly GPLed Free Software BIOS

A few months back, I posted an article related to the BIOS which described its functions. BIOS is an acronym for Basic Input Output System, and it is the starting point of the boot process in your computer. But one of the disadvantages of the proprietary BIOSes embedded in most PCs is that they carry a good amount of code to support legacy operating systems such as DOS, and the end result is a longer time taken to boot up and pass control to the resident operating system.

- 100% Free Software BIOS (GPL)

- No royalties or license fees!

- Fast boot times (3 seconds from power-on to Linux console)

- Avoids the need for a slow, buggy, proprietary BIOS

- Runs in 32-Bit protected mode almost from the start

- Written in C, contains virtually no assembly code

- Supports a wide variety of hardware and payloads

- Further features: netboot, serial console, remote flashing, ...

Is Free Software the future of India? Steve Ballmer CEO of Microsoft answers...

Labels: slashdotted

Book Review: Ubuntu Hacks

I recently got hold of a very nice book on Ubuntu called Ubuntu Hacks, co-authored by Kyle Rankin, Jonathan Oxer and Bill Childers. This is the latest in the Hacks series of books published by O'Reilly. They made a rough cut version of the book available online ahead of schedule, which is how I got hold of it, but as of now you can also buy the book in print. In a nutshell, this book is a collection of around 100 tips and tricks, which the authors choose to call hacks, that explain how to accomplish various tasks in Ubuntu Linux. The so-called hacks range from the downright ordinary to, at the other end of the spectrum, doing specialised things.

Book Specifications

Name : Ubuntu Hacks

Authors : Kyle Rankin, Jonathan Oxer and Bill Childers

ISBN No: 0-596-52720-9

No of pages: 447

Price : Check at Amazon.com or compare prices of the book.

Rating: Very good

Labels: book reviews, ubuntu

A list of Ubuntu/Kubuntu repositories

Labels: repositories, ubuntu

Learning to use netcat - The TCP/IP swiss army knife

For the server :

nc -l <port number>

For the client :

nc <server ip address> <port number>

- You can transfer files by this method between remote machines.

- You can serve a file on a particular port on a machine and multiple remote machines can connect to that port and access the file.

- Create a partition image and send it to the remote machine on the fly.

- Compress critical files on the server machine and then have them pulled by a remote machine.

- And you can do all this securely using a combination of netcat and SSH.

- It can be used as a port scanner too by use of the -z option.
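To give a feel for what the port scanning use amounts to, here is a minimal Python sketch that checks whether a TCP port accepts connections. It only illustrates the idea of a connect scan and is not a description of how netcat is implemented; the function names port_is_open and scan are hypothetical.

```python
import socket


def port_is_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


def scan(host, ports, timeout=1.0):
    """Return the ports from the given iterable that accept connections."""
    return [port for port in ports if port_is_open(host, port, timeout)]


if __name__ == "__main__":
    # Check a handful of well-known ports on the local machine.
    print(scan("127.0.0.1", [22, 25, 80, 443]))
```

As with any port scanner, only point this at machines you administer or have permission to test.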